Hello Interactors,

The social sciences sometimes unfairly get a bad rap for being a ‘soft science’. But are they really soft? In pursuit of a better understanding of the role uncertainty plays in economic analysis, I stumbled across some research that ties John Maynard Keynes’s embrace of uncertainty to a resolute defense of the ‘soft sciences’ by one of the heroes of the ‘hard sciences.’ And you thought physics was hard.

As interactors, you’re special individuals self-selected to be a part of an evolutionary journey. You’re also members of an attentive community, so I welcome your participation.

Please leave your comments below or email me directly.

Now let’s go…

CYBERSAIL

“The hard sciences are successful because they deal with the soft problems; the soft sciences are struggling because they deal with the hard problems.”[1]

This quote comes from the groundbreaking Austrian American polymath Heinz von Foerster, in his essays on information processing and cognition. He went on to state:

“If a system is too complex to be understood it is broken up into smaller pieces. If they, in turn, are still too complex, they are broken up into even smaller pieces, and so on, until the pieces are so small that at least one piece can be understood.”

This strategy, he observed, has proven successful in the “hard sciences” like mathematics, physics, and computer science, but it poses challenges for those in the “soft sciences” like economics, sociology, psychology, linguistics, anthropology, and others.
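Von Foerster’s decomposition strategy is concrete enough to write down as a procedure. The Python below is a minimal sketch, not anything taken from his essays: the function names `decompose` and `halve`, the toy “system” of eight numbers, the test for what counts as “understood,” and the `max_depth` safety limit are all hypothetical. It splits every piece one level at a time and stops as soon as at least one piece passes the test, following the stopping rule in the quote.

```python
from typing import Callable, List, TypeVar

T = TypeVar("T")


def decompose(
    system: T,
    understandable: Callable[[T], bool],
    split: Callable[[T], List[T]],
    max_depth: int = 50,
) -> List[T]:
    """Split `system` level by level until at least one piece is understandable.

    Follows the quoted strategy: keep breaking pieces into smaller pieces
    "until the pieces are so small that at least one piece can be understood."
    `max_depth` is a hypothetical safety limit, not part of the quote.
    """
    pieces = [system]
    for _ in range(max_depth):
        if any(understandable(p) for p in pieces):
            return pieces
        # Replace every piece with its sub-pieces and look one level deeper.
        pieces = [sub for p in pieces for sub in split(p)]
    raise ValueError("no understandable piece found within max_depth levels")


def halve(seq: List[int]) -> List[List[int]]:
    """Hypothetical splitting rule: cut a list into two halves."""
    mid = len(seq) // 2
    return [seq[:mid], seq[mid:]]


if __name__ == "__main__":
    # Toy "system": eight numbers; a piece counts as understood at two items or fewer.
    print(decompose(list(range(8)), lambda p: len(p) <= 2, halve))
    # [[0, 1], [2, 3], [4, 5], [6, 7]]
```

In the toy run, 1 and 2 end up in different pieces, so any relationship between them is invisible in the result. That lost interaction is the difficulty von Foerster raises for the social sciences.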

He continues,

“If [social scientists] reduce the complexity of the system of their interest, i.e., society, psyche, culture, language, etc., by breaking it up into smaller parts for further inspection they would soon no longer be able to claim that they are dealing with the original system of their choice.

This is so, because these scientists are dealing with essentially nonlinear systems whose salient features are represented by the interactions between whatever one may call their “parts” whose properties in isolation add little, if anything, to the understanding of the workings of these systems when each is taken as a whole.

Consequently, if he wishes to remain in the field of his choice, the scientist who works in the soft sciences is faced with a formidable problem: he cannot afford to lose sight of the full complexity of his system, on the other hand it becomes more and more urgent that his problems be solved.”

Von Foerster studied physics in Austria and Poland and moved to the United States in 1949. In 1951 he became a professor of electrical engineering at the University of Illinois. In 1958 he received grant funding from various federal government agencies to found the Biological Computer Laboratory.

Von Foerster understood the cognitive process humans use to break large, complex problems down into smaller, discrete, linear steps. With the advent of computers, programmers typed those steps as instructions onto punch cards and fed them into the computer to process one after another: a linear process that both humans and computers can carry out. He and his lab then devised a way for a computer to do something humans cannot: conduct multiple calculations at the same time by breaking them into smaller and smaller pieces “until the pieces are so small that at least one piece can be understood.” With that, they built one of the world’s first parallel processors.

While von Foerster helped bring about a machine that could do what a human could not, he and his colleagues also discovered what a human can do that a machine cannot. A parallel computer can break down and execute calculations across a network of instructions, but it can’t take in additional input from its environment and decide to adjust course depending on the nature of the results. It operates as a closed system, limited to the information it has been given.

I like the metaphor of sailing to better understand this. When I’m at the tiller of a sailboat, steering with a course in mind, I must continually monitor the environment (e.g., wind speed and direction, tides, currents, ripples, waves), the sails (angles, pressures, sail shape, obstructions), the crew (safety, comfort, skill, attitude, joy, fear, anxiety), and the course and speed of the boat (too fast, too slow, tack, jibe, steer). All my senses continually feed in information as conditions change. My brain makes calculations and judgements, resulting in decisions that in turn affect the conditions. For example, make a sudden turn and the sails will fail, the water under the boat will be redirected, air and water pressure gradients will shift, and a crew member may fall or go overboard. All these shifts in conditions in turn affect my subsequent calculations and decisions, instant by instant. It’s a persistent feedback loop of information created by human interactions with the boat, the crew, and nature.

A computer cannot yet steer as a human would in such conditions. Computers lack the necessary level of sensory input from changing environmental conditions, as well as the judgement and control to act on the information those senses provide. The study of information drawn from such complex phenomena takes its name from the Greek word for “steersman” or “navigator,” κυβερνήτης (kubernḗtēs), or, as it has come to be called, Cybernetics. How we got from ‘kuber’ to ‘cyber’ I’m not sure, but I have a hunch that is about to be revealed.

KEYNESIAN BRAIN CHAIN

One of the founders of Cybernetics in the 1940s, Norbert Wiener, defined it as “the entire field of control and communication theory, whether in the machine or in the animal.” Other founders described it as the study of “circular causal and feedback mechanisms in biological and social systems.” Another member of the founding group, the influential cultural anthropologist Margaret Mead, called it “a form of cross-disciplinary thought which made it possible for members of many disciplines to communicate with each other easily in a language which all could understand.”

Von Foerster’s seminars in Cybernetics grew very popular at the University of Illinois in the 1960s and ’70s. But these early adopters were not the first to use the term for complex social information exchanges that create causal feedback loops. In 1834 the French mathematician and physicist André-Marie Ampère, an early pioneer of the electric telegraph and the namesake of the ampere, the unit of electric current, used the term cybernétique to describe “the art of governing or the science of government.” Perhaps that’s how we got from ‘kuber’ to ‘cyber’.

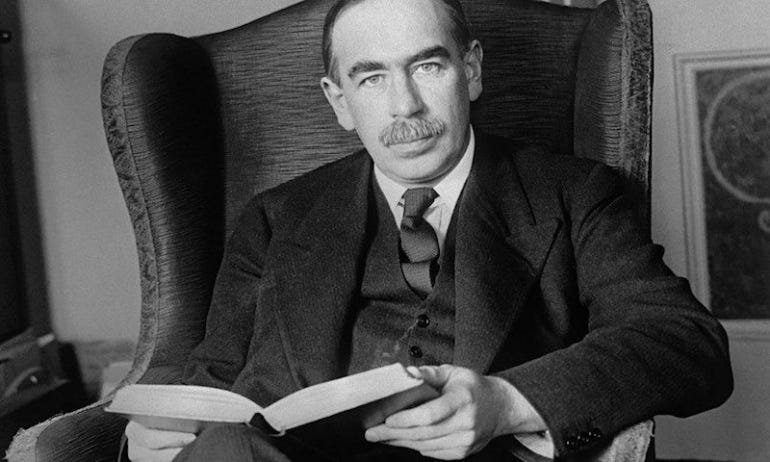

Either way, whether in political science, economics, or the other so-called “soft sciences,” these early cross-disciplinary thinkers felt the urge to find ways to solve hard problems: problems so complex they become impossible to deal with or track. They become intractable. Robert Delorme, a professor emeritus of economics at the University of Versailles Saint-Quentin-en-Yvelines, encountered such intractable problems in his work. He has since sought to establish a framework for dealing with them that draws on the work of von Foerster, and also on someone we mentioned last week, the famous British economist John Maynard Keynes.

Delorme was studying institutional patterns in public spending in Great Britain and France over long periods. This yielded a great deal of quantitative data, but also qualitative data, including behavioral differences in how governments and markets interacted with each other within each country. Delorme also studied traffic fatality data from the two countries and hit the same challenge. While there were mounds of quantitative data, the qualitative data was quite specific to each country, its driving culture, the circumstances of individual accidents, and individual drivers’ behavior. In trying to break these complex problems into smaller and smaller pieces, he hit the dilemma von Foerster spoke of: the closer he got to understanding the massive mound of data in front of him, the further he got from his original economic research question.

To better model the uncertainty that arose from behaviors and interactions in these systems, Delorme turned to the tools of complexity economics. He considered real-world simulation approaches like complex adaptive systems (CAS), agent-based computational economics (ACE), agent-based models (ABM), and agent-based simulation (ABS). But he realized this tool-first approach resembled the orthodox, or ‘classical,’ style of economic inquiry that Keynes criticized. While he recognized these tools as necessary and helpful, he found them insufficient for explaining the complexity that arises from events in “the real world.”

Delorme quotes Keynes’s 1936 book, The General Theory of Employment, Interest and Money, in which he sees Keynes’s own need to break complex problems into smaller and smaller pieces while staying true to the actual problem. Keynes acknowledged “the extreme complexity of the actual course of events…” He then describes the need to break the problem down into “less intractable material upon which to work…” in order to bring understanding “to actual phenomena of the economic system (…) in which we live…”

According to Delorme, Keynes’s economic philosophy, approach, and writings have been criticized over the years for lacking any formalization of the methods he used to arrive at his conclusions and theories. So Delorme combed through Keynes’s writing to uncover an array of consistent patterns and methodological approaches, which he has pieced back together and formalized.

He found that Keynes, like the helmsman of a boat, adapted and adjusted his approach depending on the complexity of the subject matter the economic environment presented. When faced with intractable problems, Keynes applied a set of principles and priorities that Delorme found useful for his own intractable problems. The main priority, he found, was to take a ‘problem-first’ approach, confronting the reality of the world rather than assuming the perfect conditions of the mythical rational world common in traditional economics.

Again, using sailing as a metaphor, imagine the compass shows you’re heading north toward your desired destination, but the wind is blowing in your face and slowing you down. It’s time to decide and act in response to the environmental conditions: disregard the tool for now, angle the boat east or west, fill the sails, and zigzag your way toward your northerly goal, returning to the tool, the compass, from time to time.

What Delorme found next was Keynes’s embrace of uncertainty. Instead of finding comfort in atomizing and categorizing to better assess risk, Keynes found comfort in acknowledging the intricate, organic interdependence that comes with interactions within and among irrational people and uncertain systems and environments. He rejected the ‘either-or’ of dualism and embraced the open-ended ‘both-and’ of uncertainty. In other words, when the wind suddenly shifts, the helmsperson can’t choose between ramming the tiller to one side and adjusting the sails. They must both move the tiller and adjust the sails.

REPLICATE TO INVESTIGATE

To refine his framework for dealing with complex phenomena, Delorme also drew inspiration from the work of one of my own inspirations, Herb Simon.

What Delorme borrowed from Simon was a way “in which the subject must gather information of various kinds and process it in different ways in order to arrive at a reasonable course of action, a solution to the problem.”[2] Characterized as a cybernetic loop, this process takes in input by gathering information, then assesses it and decides on a reasonable course of action. That action in turn causes a reaction in the system, producing an output that is sensed and fed back into the loop as new input. This notion of a looping system made of simple rules that generates variations of itself is reminiscent of the work of a third inspiration for Delorme, John von Neumann.
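The loop Delorme borrows from Simon (sense, decide, act, sense again) can be sketched as a tiny simulation. The Python below is a hypothetical illustration in the spirit of the sailing metaphor, not Delorme’s or Simon’s model: the `steer` function, the proportional `gain`, and the random `gust` disturbance are assumptions chosen for simplicity. Each pass gathers information (how far off course the boat is), decides on a correction, acts, and the resulting heading becomes the next input.

```python
import random
from typing import List


def steer(
    target: float,
    heading: float,
    steps: int,
    gain: float = 0.5,
    gust: float = 5.0,
    seed: int = 0,
) -> List[float]:
    """Toy cybernetic loop: sense, decide, act, and feed the result back in.

    All parameter names and values are hypothetical illustrations.
    Headings are in degrees; angle wraparound is ignored for simplicity.
    """
    rng = random.Random(seed)
    history = [heading]
    for _ in range(steps):
        error = target - heading                 # input: sense how far off course we are
        correction = gain * error                # decision: a proportional tiller correction
        disturbance = rng.uniform(-gust, gust)   # environment: wind and waves
        heading = heading + correction + disturbance  # action changes the system...
        history.append(heading)                  # ...and becomes the next input
    return history


if __name__ == "__main__":
    # With no gusts, each step halves the remaining error.
    print(steer(0.0, 40.0, 5, gust=0.0))  # [40.0, 20.0, 10.0, 5.0, 2.5, 1.25]
```

With gusts switched on, the heading never settles exactly, but the loop keeps pulling it back toward the target. A proportional rule is only one of many possible decision rules.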

Von Neumann was a Hungarian American polymath who made significant contributions to mathematics, physics, economics, and computer science. He developed the mathematical models behind game theory, invented the merge sort algorithm, and designed the first known self-replicating cellular automaton. And for all you grid-paper doodlers out there, he first worked it out on grid paper with a pencil. Today such simulations run on computers.

By assigning very simple ‘black and white’ rules to cells in a grid (for example, make a cell white or black depending on whether its neighboring cells are black or white), one can produce surprisingly complex, animated, and self-replicating behavior. One popular example is Gosper’s glider gun, a pattern in John Conway’s Game of Life. It features two simple cellular arrows that shuttle back and forth, left to right, across the screen on a shared path. When they collide, they produce smaller, simpler cellular offspring called gliders, which cycle through their shapes as they travel diagonally toward the lower right corner of the page or screen.
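The rule-driven behavior described above can be reproduced in a few lines. The Python sketch below implements Conway’s Game of Life, the two-state rule set in which Gosper’s glider gun lives (von Neumann’s own self-replicating automaton used a far more elaborate 29-state rule). In Life, a dead cell with exactly three live neighbors comes alive, and a live cell with two or three live neighbors survives. To keep the example short it simulates a single glider, the offspring the gun emits, rather than the full gun; the names `step` and `GLIDER` are illustrative choices.

```python
from collections import Counter
from typing import Set, Tuple

Cell = Tuple[int, int]  # (row, column)


def step(live: Set[Cell]) -> Set[Cell]:
    """Advance Conway's Game of Life by one generation on an unbounded grid.

    Rules (B3/S23): a dead cell with exactly 3 live neighbors becomes live;
    a live cell with 2 or 3 live neighbors stays live; every other cell is dead.
    """
    # Count, for every cell adjacent to a live cell, how many live neighbors it has.
    counts = Counter(
        (r + dr, c + dc)
        for r, c in live
        for dr in (-1, 0, 1)
        for dc in (-1, 0, 1)
        if (dr, dc) != (0, 0)
    )
    return {cell for cell, n in counts.items() if n == 3 or (n == 2 and cell in live)}


# A glider, the small pattern a Gosper glider gun emits:
#   . X .
#   . . X
#   X X X
GLIDER = {(0, 1), (1, 2), (2, 0), (2, 1), (2, 2)}

if __name__ == "__main__":
    cells = GLIDER
    for _ in range(4):
        cells = step(cells)
    # After four generations the glider has its original shape, shifted one cell down and right.
    print(cells == {(r + 1, c + 1) for r, c in GLIDER})  # True
```

Storing only the live cells in a set keeps the grid unbounded, so the glider can travel indefinitely without hitting an edge.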

Delorme noticed that von Neumann used this self-replication phenomenon to describe a fundamental property of complex systems. If an automaton’s complexity is below a certain threshold, the automata it produces will be less complex, or degenerative, as is the case with Gosper’s gun. However, if the threshold is exceeded, it can overproduce. Or, in von Neumann’s words, such a system, “if properly arranged, can become explosive.”[3]

What Delorme’s research suggests, I think, is that to address complex, intractable economic problems, one must devise a looping, recursive system of inquiry whose self-replicating output informs the researcher’s next decision. This makes the researcher both an observer of and a participant in the search for solutions. The trick is to hold complexity at a level where the output doesn’t, again, become overwhelming or explosive.

In other words, instead of pointing tools at a mound of data in an attempt to describe a static snapshot of what is in the world, create a circular, participatory system that recursively produces output, which in turn shapes, in near real time, how one adjusts what it produces next.

As Delorme writes, “Complexity is not inherent to reality but to our knowledge of reality, it is derivative rather than inherent.” He then quotes the philosopher of science Lee McIntyre, who argues that complexity exists “not merely as a feature of the world, but as a feature of our attempts to understand the world.”[4]

I’m not sure what this kind of system looks like in practice, but I think Minsky, the software tool developed by the economist Steve Keen, is a start. Keen created this dynamic simulation software to model macroeconomics after he predicted the 2008 financial crisis. He hopes to entice people away from the static, equilibrium-fixated style of economics taught and practiced today.

The amount of data available for dynamically assessing economic outcomes involving complex human behavior, human-made systems, and the natural world continues to push thresholds of complexity. We are creators, observers, and interactors of information in our own self-perpetuating, recursive constructions of reality. But as von Foerster suggested, even as we break complex problems down into parts, we can’t lose sight of the whole.

That reminds me of a quote from another ‘von’, the linguist and philosopher Wilhelm von Humboldt, older brother of the famous naturalist Alexander von Humboldt. In 1788 he wrote,

“Nothing stands isolated in nature, for everything is combined, everything forms a whole, but with a thousand different and manifold sides. The researcher must first decompose and look at each part singly and for itself and then consider it as a part of a whole. But here, as often happens, he cannot stop. He has to combine them together again, re-create the whole as it earlier appeared before his eyes.”[5]

1. Understanding Understanding: Essays on Cybernetics and Cognition. Heinz von Foerster. 2003.

2. A Cognitive Behavioral Modelling for Coping with Intractable Complex Phenomena in Economics and Social Science: Deep Complexity. Economic Philosophy: Complexities in Economics Keynote Address. Robert Delorme. 2017.

3. Ibid.

4. Ibid.

5. Vitalizing Nature in the Enlightenment. Peter H. Reill. 2005.
